Embedded Home Surveillance System with Person and Object Detection (August 2025)

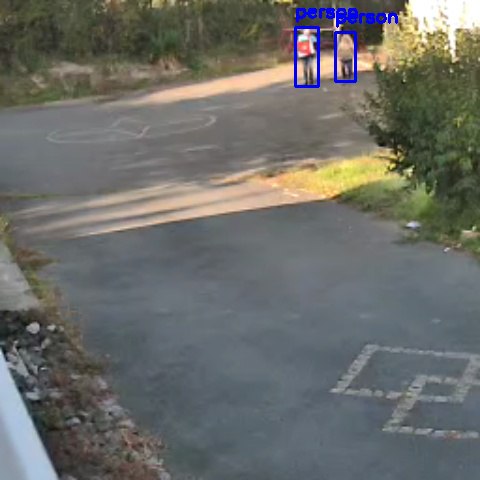

Stream acquisition and processing on an embedded system, using motion detection followed by object detection (YOLO), with an alert system.
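A minimal sketch of the "motion detection followed by object detection" idea, assuming grayscale frames given as lists of pixel rows. It is not the project's actual code. A cheap frame-difference gate decides whether the expensive detector runs at all. `detect` is a hypothetical stand-in for a YOLO model that returns class labels, and `alert` is a hypothetical callback. Both thresholds are illustrative assumptions. A real embedded pipeline would more likely rely on OpenCV (for example `cv2.absdiff`) and a YOLO library.

```python
from typing import Callable, Iterable, List, Optional, Sequence

# Hypothetical frame type: a grayscale image as rows of 0..255 intensities.
Frame = List[List[int]]


def motion_ratio(prev: Frame, curr: Frame, pixel_thresh: int = 25) -> float:
    """Fraction of pixels whose intensity changed by more than pixel_thresh."""
    changed = total = 0
    for row_prev, row_curr in zip(prev, curr):
        for p, c in zip(row_prev, row_curr):
            total += 1
            if abs(c - p) > pixel_thresh:
                changed += 1
    return changed / total if total else 0.0


def process_stream(
    frames: Iterable[Frame],
    detect: Callable[[Frame], Sequence[str]],  # stand-in for a YOLO model
    alert: Callable[[int, str], None],         # hypothetical alert hook
    area_thresh: float = 0.01,
    alert_labels: Sequence[str] = ("person",),
) -> int:
    """Run the expensive detector only on frames with enough motion.

    Returns the number of detector calls, to show how much work the gate saves.
    """
    prev: Optional[Frame] = None
    detector_calls = 0
    for idx, frame in enumerate(frames):
        # Cheap motion gate: compare each frame with the previous one.
        if prev is not None and motion_ratio(prev, frame) >= area_thresh:
            detector_calls += 1
            for label in detect(frame):
                if label in alert_labels:
                    alert(idx, label)
        prev = frame
    return detector_calls
```

On a mostly static camera feed, the detector runs only on the few frames where something moved. That gating is what makes object detection affordable on an embedded board.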

Building software with Python (FastAPI), AI, PostgreSQL, Node.js...


Deep learning model for understanding the layout and structure of old newspaper images. (2024-2025)

Link to poster: Poster Tiramisu

Code available soon

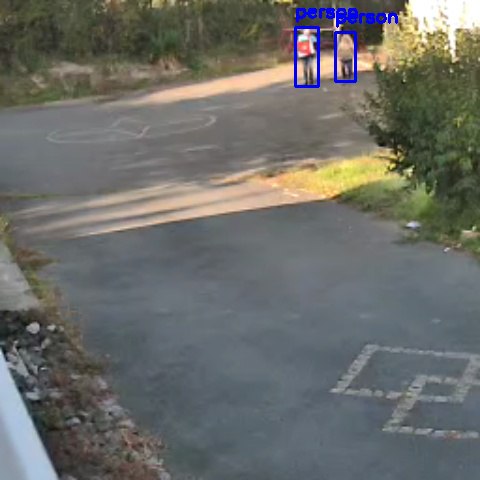

Description: Project related to the paper "Early Action Detection at instance-level driven by a controlled CTC-Based Approach" (submitted to TPAMI in 2023). Developed in Python (TensorFlow 2).

Link: Code

Link: Code

Description: The framework evaluates the performance of online action recognition models on videos, using various metrics and supporting multi-fold evaluation. Developed in Python. Code available soon.

Link: Code

Description: This project implements the papers "Online Human Action Detection Using Joint Classification-Regression Recurrent Neural Networks" and "Multi-Modality Multi-Task Recurrent Neural Network for Online Action Detection". Developed in Python (TensorFlow 2).

Description: Project related to the paper "Early Recognition of Untrimmed Handwritten Gestures with Spatio-Temporal 3D CNN" (ICPR 2022). Developed in Python (TensorFlow 2).

Link: Code

Description: Project related to my paper "Online Spatio-Temporal 3D Convolutional Neural Network for Early Recognition of Handwritten Gestures" (ICDAR 2021). Developed in Python (TensorFlow 2).

Link: Code

Description: This project implements the paper "Skeleton-Based Online Action Prediction Using Scale Selection Network". Developed in Python (TensorFlow 2). Code available soon.

Description: This software visualizes gesture sequences in 3D, together with their multi-view voxelization, ground truth, and RGB video. Developed in Unity (C#).

Description: This study uses machine learning algorithms to predict the single emoji associated with a tweet. We applied various text pre-processing methods to the tweet text and experimented with different algorithms to build the best prediction models.

Code: View Code

Report: View Report

Project Link: DERG3D Project

Description: I started this project during a course project at INSA and updated it during my PhD for the practical work of the "AMRG" module in the 5th year at INSA.

Duration: May 2019 - June 2019

Associated with VR2Planets

Description: Testing different interaction techniques for markers (creation, movement, deletion) in a virtual reality environment.

Duration: Jan 2019 - Apr 2019

Description: Fourth-year module project focused on shape recognition. Created a complete chain for symbol recognition, including preprocessing, classification, and a demo application.

Duration: June 2018 - August 2018

Description: Developed an application to find products in a supermarket with an optimal path.
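The "optimal path" idea can be illustrated with a small exhaustive search. This is a sketch under stated assumptions, not the application's actual algorithm. The store is modeled as a hypothetical grid of (aisle, shelf) coordinates, and Manhattan distance serves as a rough proxy for walking distance that ignores shelves as obstacles. The search tries every visiting order from the entrance, through each product, to the checkout. The names `route_cost` and `best_route` are illustrative.

```python
from itertools import permutations
from typing import Dict, List, Sequence, Tuple

# Hypothetical store layout: each location is an (aisle, shelf) grid coordinate.
Point = Tuple[int, int]


def manhattan(a: Point, b: Point) -> int:
    """Walking-distance proxy on an aisle grid (ignores shelves as obstacles)."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def route_cost(entrance: Point, checkout: Point,
               products: Dict[str, Point], order: Sequence[str]) -> int:
    """Total distance: entrance -> each product in `order` -> checkout."""
    stops = [entrance, *(products[name] for name in order), checkout]
    return sum(manhattan(a, b) for a, b in zip(stops, stops[1:]))


def best_route(entrance: Point, checkout: Point,
               products: Dict[str, Point]) -> Tuple[List[str], int]:
    """Exhaustively try every visiting order; exact but O(n!), so small lists only."""
    best = min(permutations(products),
               key=lambda order: route_cost(entrance, checkout, products, order))
    return list(best), route_cost(entrance, checkout, products, best)
```

Brute force is exact but only practical for a handful of products. Longer shopping lists would call for Held-Karp dynamic programming or a heuristic such as nearest-neighbor ordering.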

Description: Developed a small Android game for the launch of the film "La Bataille pour les Deux-Alpes" produced by a friend.

Google Play Link

Duration: Feb 2018 - May 2018

Description: Implemented AI for the game Mario using the A* algorithm to autonomously navigate levels.
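The core A* loop can be sketched on a simplified level. This is an assumption for illustration: the game AI itself presumably searched over richer game states such as position, velocity, and jumps. Here the level is a hypothetical tile grid where `#` marks a solid block. Movement is 4-directional with unit step cost, and Manhattan distance is the heuristic. That heuristic is admissible in this setting, so the returned path is a shortest one.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Pos = Tuple[int, int]  # (row, column) in a hypothetical tile grid


def astar(grid: List[str], start: Pos, goal: Pos) -> Optional[List[Pos]]:
    """Shortest path from start to goal avoiding '#' tiles, or None if unreachable."""
    rows, cols = len(grid), len(grid[0])

    def h(p: Pos) -> int:
        # Manhattan distance: never overestimates with unit-cost 4-way moves.
        return abs(p[0] - goal[0]) + abs(p[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, node)
    came_from: Dict[Pos, Pos] = {}
    g_cost: Dict[Pos, int] = {start: 0}

    while open_heap:
        _, g, node = heapq.heappop(open_heap)
        if node == goal:
            # Walk parent links back to the start to rebuild the path.
            path = [node]
            while node in came_from:
                node = came_from[node]
                path.append(node)
            return path[::-1]
        if g > g_cost.get(node, float("inf")):
            continue  # stale heap entry; a cheaper route was already found
        r, c = node
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nxt = (r + dr, c + dc)
            if 0 <= nxt[0] < rows and 0 <= nxt[1] < cols and grid[nxt[0]][nxt[1]] != "#":
                new_g = g + 1
                if new_g < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = new_g
                    came_from[nxt] = node
                    heapq.heappush(open_heap, (new_g + h(nxt), new_g, nxt))
    return None
```

For example, on the level `["....", ".##.", "...."]`, a path from `(1, 0)` to `(1, 3)` has to detour around the two blocks, above or below them.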

Duration: Sept 2016 - April 2017

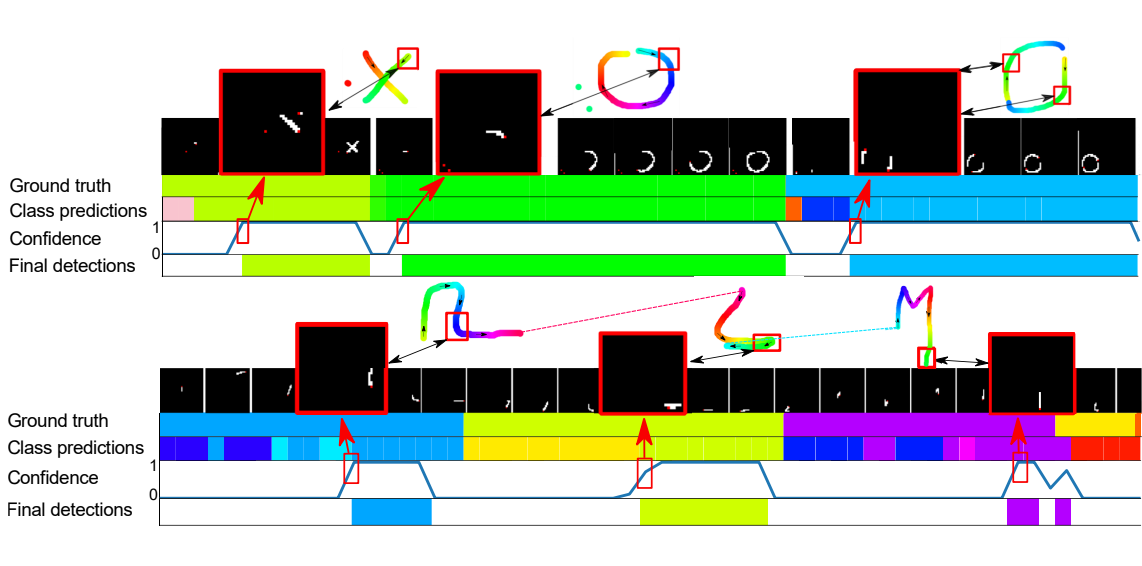

Description: Developed in 2017, this project was a grade management system that allowed teachers to organize and visualize grades and statistics, and let students track their own grades. Web development (PHP, JS, SQL, HTML/CSS).

Duration: Sept 2022 - March 2023

Description: Stay In Motion aims to address the issue of physical inactivity among young people. The project involves creating an application where users can engage in sports sessions in a virtual environment, using real-time artificial intelligence to enhance the interaction.

Link: Stay In Motion Project

Duration: Sept 2021 - May 2022

Description: This project targets the lack of physical activity among young people by developing an application that combines physical activity sessions with a digital world, offering live feedback and guidance to users.

Link: Move On Progress Project

Duration: Sept 2020 - May 2021

Description: The R3G project supports research on early recognition of gestures in real-time. It involves the creation of a software suite to assist researchers in developing 2D and 3D gesture recognition engines.